Neural representation of value

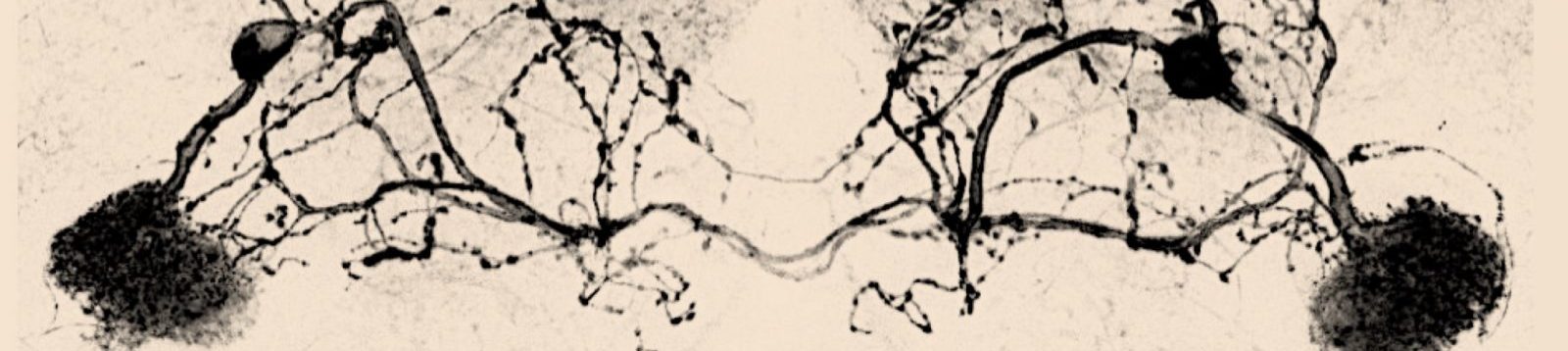

One of my primary interests is to decipher the neural code that represents value information in the fly brain. This information is essential for guiding correct value-based decisions. We previously showed that multiple types of reward and punishment are relayed by specific sets of dopaminergic neurons, which provide teaching signals of positive or negative value during associative learning. Here, we aim to examine the neural circuits that represent the value information these dopaminergic neurons integrate to generate a value signal.

Relative value coding

During associative learning, absolute (good or bad) and/or relative (better or worse than) values can be assigned to each experience and later used to guide appropriate behaviour. My previous work showed that reward dopaminergic neurons are critical for comparing aversive experiences and for assigning aversive relative value to stimuli during associative learning (Perisse et al., 2013). Both well-established and recent work propose that interactions between the appetitive and aversive systems are critical for assigning absolute value to stimuli during learning and for re-evaluating learned information. Surprisingly, how these systems interact and operate at the circuit level to encode relative value is still unknown. We therefore study the neural circuits and mechanisms that compare learned value information and experience during learning to generate the relative value signal assigned to sensory stimuli (here, odours).

Value-based decisions

To survive, animals often need to compare several options and choose between them based on the value assigned to each option during past experience. How flies use learned value information to select the appropriate action remains unclear. We previously identified specific neural circuits driving learned approach and avoidance within the mushroom body, a central brain structure involved in learning, memory and goal-directed behaviour. We aim to understand the neural mechanisms underlying the representation of learned absolute and/or relative value that is needed to select the appropriate action during value-based choices.